The Dark Side of AI: Incriminating Confessions and Privacy Concerns

In the early hours of August 28, a quiet car park at a Missouri college became the scene of a vandalism spree that left 17 cars damaged. In a short space of time, the vehicles were left with shattered windows, broken mirrors, and extensive body damage, amounting to tens of thousands of dollars in repairs.

Investigation and Incriminating Chats

During a month-long investigation, authorities gathered evidence including shoe prints, witness accounts, and security footage. However, it was an unexpected confession to ChatGPT, an AI chatbot, that proved pivotal in charging 19-year-old college student Ryan Schaefer.

ChatGPT as a Confessional Tool

In his conversations with the chatbot shortly after the incident, Schaefer appeared to reflect on the damage, asking, “how f**ked am I bro?.. What if I smashed the shit outta multiple cars?” The case appears to be the first in which someone has purportedly implicated themselves through an AI platform, raising serious questions about privacy and self-incrimination.

AI in High-Profile Crimes

Less than a week after Schaefer was charged, ChatGPT featured in a far more serious case involving 29-year-old Jonathan Rinderknecht. He was arrested on suspicion of starting the Palisades Fire in California, which caused numerous deaths and widespread destruction. According to reports, he had asked the chatbot to generate images of a burning city.

Legal and Privacy Implications

These cases point to a concerning trend in which AI chatbots can serve as tools for both criminal activity and law enforcement. OpenAI CEO Sam Altman has noted that users currently have no legal confidentiality protections for their conversations with such chatbots, highlighting both the privacy risks and how much intimate detail people willingly share.

The Role of AI in Personal Contexts

AI tools such as ChatGPT can do everything from editing images to deciphering complex documents. As people increasingly turn to them for medical advice, shopping recommendations, and role-play, it is important to recognize how much personal data these platforms can accumulate and analyze.

Exploiting Sensitive Data

In this evolving landscape, companies building AI products are tapping into vast reservoirs of personal information. Meta, for instance, has announced that it will use people's interactions with its AI tools to target advertising across platforms such as Facebook and Instagram, and users will not be able to opt out. The policy raises ethical concerns about data privacy and the exploitation of consumers.

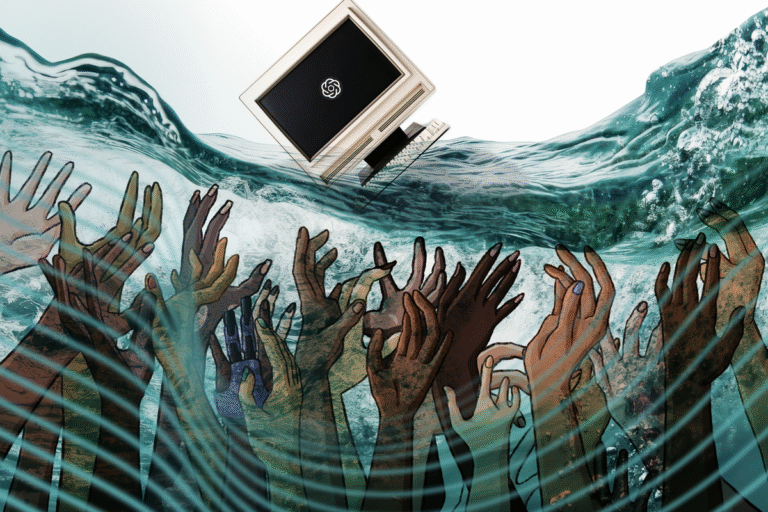

Privacy at a Crossroads

The trade-off between convenience and personal privacy in the age of AI warrants closer scrutiny. These incidents, together with broader data-harvesting practices, have drawn comparisons with past data scandals and renewed the focus on protecting consumers' data rights. With more than a billion people now using AI applications, the adage “if you’re not paying for a service, you are the product” may need updating for a reality in which users risk becoming unwitting prey.